How Many Features Should You Have In Machine Learning

Feature Selection in Machine Learning: Correlation Matrix | Univariate Testing | RFECV

What is Feature Selection

Feature Selection is the procedure used to select the input variables that are most important to your Machine Learning task.

In a Supervised Learning task, your goal is to predict an output variable. In some cases you are given only a few input variables to work with, but at other times you will have access to a large set of potential predictors. In that case, it can often be harmful to feed all of these input variables into your model. This is where feature selection comes in.

Why use Feature Selection

- Improve Model Accuracy

- Lower Computational Cost

- Easier to Understand & Explain

Ways to carry out Feature Selection

1. Correlation Matrix

A correlation matrix is simply a table that displays the correlation coefficients between variables. It shows the correlation for every possible pair of variables in the table. It is a powerful tool to summarize a large dataset and to identify and visualize patterns in the data.

A correlation matrix consists of rows and columns that represent the variables. Each cell in the table contains the correlation coefficient for the corresponding pair of variables.

Correlation Matrix

Correlation Template Code
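As a minimal sketch of the idea, the snippet below builds a correlation matrix with pandas. The toy dataset and the column names (`x1`, `x2`, `x3`, `y`) are made up for illustration and are not from the original template.

```python
import numpy as np
import pandas as pd

# Hypothetical toy dataset: two related inputs, one unrelated input, one output.
rng = np.random.default_rng(42)
n = 200
x1 = rng.normal(size=n)
x2 = 0.9 * x1 + rng.normal(scale=0.1, size=n)  # strongly related to x1
x3 = rng.normal(size=n)                        # unrelated noise
y = 2.0 * x1 + rng.normal(scale=0.5, size=n)   # output driven by x1

df = pd.DataFrame({"x1": x1, "x2": x2, "x3": x3, "y": y})

# Pearson correlation coefficient for every pair of columns.
corr_matrix = df.corr()
print(corr_matrix.round(2))

# Absolute correlation of each input with the output, strongest first.
corr_with_output = corr_matrix["y"].drop("y").abs().sort_values(ascending=False)
print(corr_with_output)
```

A high correlation between two inputs (here `x1` and `x2`) is also worth noting, since it suggests they carry much of the same information.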

2. Univariate Testing

Univariate Feature Selection, or Univariate Testing, applies statistical tests to detect relationships between the output variable and each input variable in isolation. Tests are conducted one input variable at a time. The test you use depends on whether you are running a regression task or a classification task.

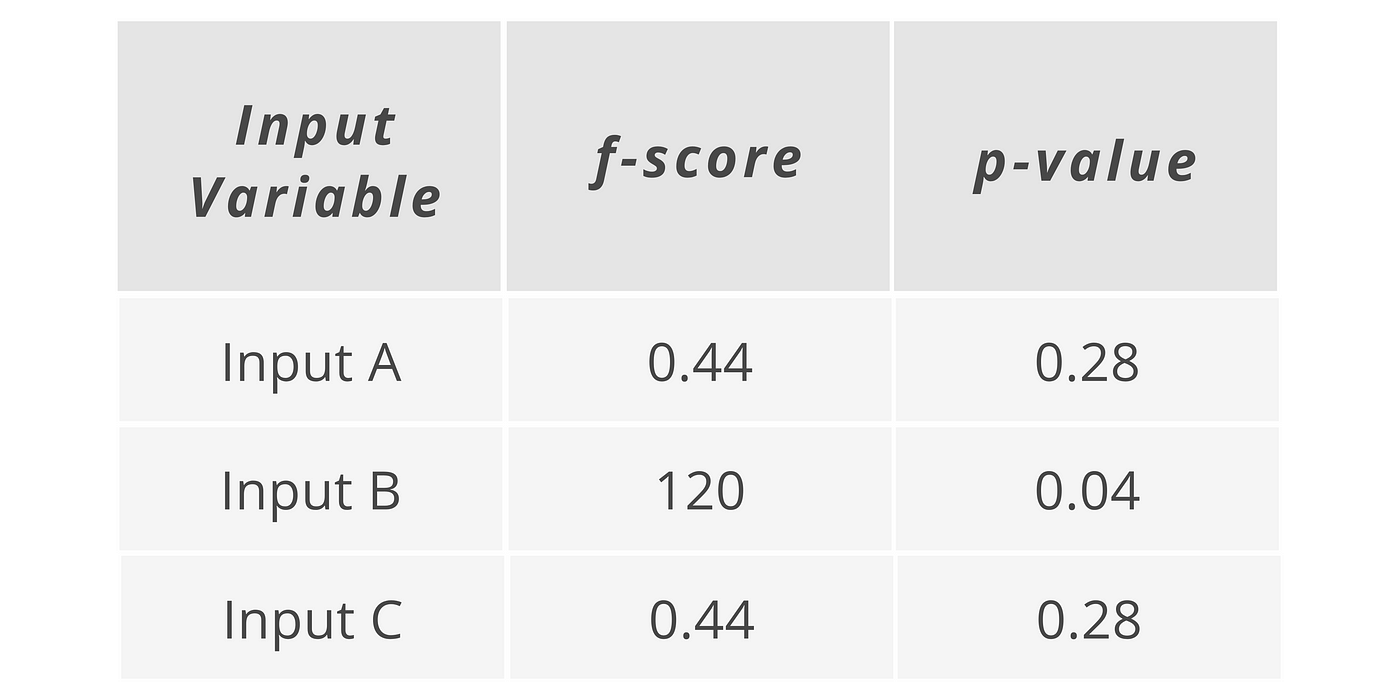

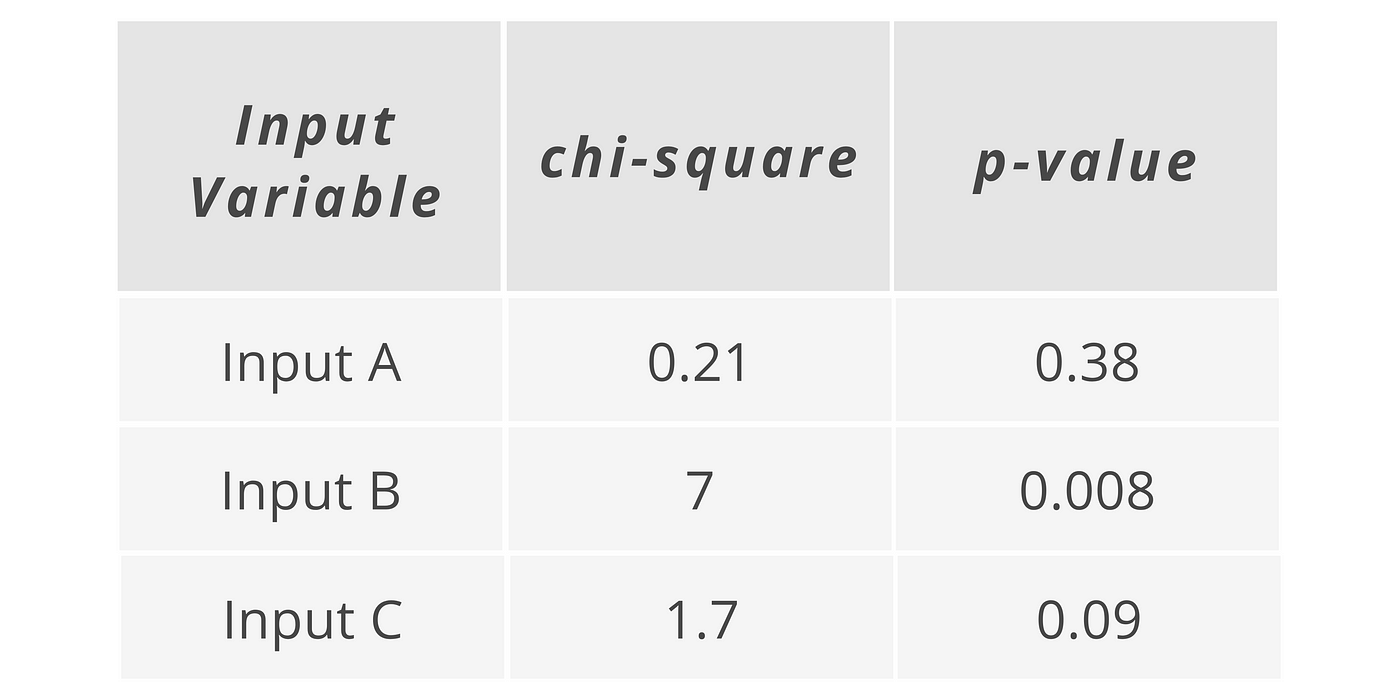

An example output of these tests is a table showing relationship scores between each input variable and the output (see the images above).

Regression Task

In a regression task, you may be provided with an F-score and a p-value for each variable, which give you a view of the statistical significance of the relationship between each input variable and the output variable. This helps you assess how confident you should be in the variables you use in your model.

Univariate Testing: Regression Task Code Template
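Here is one way this could look with scikit-learn's `SelectKBest` and `f_regression`. The synthetic data, the `input_*` column names, and the 0.05 p-value cut-off are assumptions made for this sketch, not part of the original template.

```python
import pandas as pd
from sklearn.datasets import make_regression
from sklearn.feature_selection import SelectKBest, f_regression

# Hypothetical synthetic data: 6 input variables, only 3 of which drive the output.
X_arr, y = make_regression(
    n_samples=300, n_features=6, n_informative=3, noise=10.0, random_state=42
)
X = pd.DataFrame(X_arr, columns=[f"input_{i}" for i in range(6)])

# Score every input variable against the output, one variable at a time.
selector = SelectKBest(score_func=f_regression, k="all")
selector.fit(X, y)

# Table of F-scores and p-values, strongest relationship first.
summary = pd.DataFrame(
    {"variable": X.columns, "f_score": selector.scores_, "p_value": selector.pvalues_}
).sort_values("f_score", ascending=False)
print(summary)

# Illustrative threshold (an assumption, not a rule): keep variables with p < 0.05.
selected = summary.loc[summary["p_value"] < 0.05, "variable"].tolist()
print(selected)
```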

Classification Task

Depending on which test you use, you might be provided with a chi-square score and a p-value for each variable. Again, this gives you a view of the statistical significance of the relationship between each input variable and the output variable.

In either Regression or Classification tasks, this gives you basic information about which variables may be more important than others. You could also set a threshold on the statistical test scores, the p-values, or both, so that you only include variables that appear to have a reliable relationship with the output variable you are looking to predict.

Univariate Testing: Classification Task Code Template
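A comparable sketch for classification, using scikit-learn's `chi2` test on the bundled iris dataset. The choice of dataset and the 0.05 cut-off are assumptions for illustration. Note that `chi2` expects non-negative feature values.

```python
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.feature_selection import SelectKBest, chi2

# Bundled example dataset: 4 non-negative input variables, 3 classes.
iris = load_iris(as_frame=True)
X, y = iris.data, iris.target

# Score every input variable against the class label, one variable at a time.
selector = SelectKBest(score_func=chi2, k="all")
selector.fit(X, y)

# Table of chi-square scores and p-values, strongest relationship first.
summary = pd.DataFrame(
    {"variable": X.columns, "chi2_score": selector.scores_, "p_value": selector.pvalues_}
).sort_values("chi2_score", ascending=False)
print(summary)

# Illustrative threshold (an assumption, not a rule): keep variables with p < 0.05.
selected = summary.loc[summary["p_value"] < 0.05, "variable"].tolist()
print(selected)
```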

Note: The main downside of univariate testing is that it only considers variables in isolation. It doesn't account for variables that interact with each other.
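To make this limitation concrete, here is a small constructed example (hypothetical data, not from the original article). The output is the XOR of two binary inputs, so neither input shows any relationship with the output on its own, even though together they determine it completely.

```python
import numpy as np
from sklearn.feature_selection import chi2

# Hypothetical data: every combination of two binary inputs, repeated 25 times.
x1 = np.tile([0, 0, 1, 1], 25)
x2 = np.tile([0, 1, 0, 1], 25)
y = x1 ^ x2  # the output depends only on the interaction (XOR) of x1 and x2

# Test x1 and x2 in isolation, plus an explicit interaction column for comparison.
X = np.column_stack([x1, x2, x1 ^ x2])
scores, p_values = chi2(X, y)

for name, score, p_value in zip(["x1", "x2", "x1_xor_x2"], scores, p_values):
    print(f"{name}: chi2={score:.2f}, p={p_value:.4f}")
```

Tested one at a time, `x1` and `x2` would both be discarded, while the interaction column scores highly. A model-based method is better placed to pick up this kind of relationship.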

3. Recursive Feature Elimination with Cross-Validation (RFECV)

Recursive Feature Elimination fits a model that starts with all the input variables, then iteratively removes those with the weakest relationship with the output until the desired number of features is reached. Unlike Univariate Testing, it actually fits a model instead of just running statistical tests.

"RFE is popular because it is easy to configure and use and because it is effective at selecting those features in a training dataset that are more or most relevant in predicting the target variable."

The CV in RFECV stands for Cross-Validation. It gives you a better understanding of which variables should be included in your model.

In the Cross-Validation part, the algorithm splits the data into different chunks and iteratively trains and validates models, holding out each chunk in turn for validation. This means that each time you assess different models with certain variables included or eliminated, the algorithm also knows how accurate each model was, can determine which scenario provided the best accuracy, and concludes the best set of input variables to use.
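The chunking step can be sketched with scikit-learn's `KFold`. The 10-row array and the choice of 5 folds are assumptions for illustration only.

```python
import numpy as np
from sklearn.model_selection import KFold

# Hypothetical dataset with 10 rows and 2 input variables.
X = np.arange(20).reshape(10, 2)

# Split the rows into 5 chunks; each chunk is held out once for validation.
kfold = KFold(n_splits=5, shuffle=True, random_state=42)

fold_sizes = []
validated_rows = []
for fold, (train_idx, val_idx) in enumerate(kfold.split(X), start=1):
    fold_sizes.append((len(train_idx), len(val_idx)))
    validated_rows.extend(val_idx.tolist())
    print(f"Fold {fold}: train rows={train_idx.tolist()}, validation rows={val_idx.tolist()}")
```

Every row ends up in the validation chunk exactly once, so each candidate model is scored on data it was not trained on.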

Recursive Feature Elimination with CV Code Template
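One possible version of such a template uses scikit-learn's `RFECV`. The synthetic data, the `LinearRegression` estimator, the 5 folds, and the `r2` scoring metric are all assumptions chosen for this sketch.

```python
import pandas as pd
from sklearn.datasets import make_regression
from sklearn.feature_selection import RFECV
from sklearn.linear_model import LinearRegression

# Hypothetical synthetic data: 8 input variables, only 3 of which drive the output.
X_arr, y = make_regression(
    n_samples=300, n_features=8, n_informative=3, noise=10.0, random_state=42
)
X = pd.DataFrame(X_arr, columns=[f"input_{i}" for i in range(8)])

# Drop one variable per step and score each feature-set size with 5-fold CV.
feature_selector = RFECV(estimator=LinearRegression(), step=1, cv=5, scoring="r2")
feature_selector.fit(X, y)

# Number of variables that gave the best cross-validated score, and their names.
optimal_feature_count = feature_selector.n_features_
selected = X.columns[feature_selector.support_].tolist()
print("Optimal number of features:", optimal_feature_count)
print("Selected variables:", selected)

# Keep only the selected input variables for modelling.
X_selected = X.loc[:, feature_selector.support_]
```

With a linear model, RFE ranks variables by the size of their coefficients, so this works best when the inputs are on comparable scales, as they are in this synthetic data.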


Source: https://medium.com/geekculture/feature-selection-in-machine-learning-correlation-matrix-univariate-testing-rfecv-1186168fac12

Posted by: whitethenetiong1938.blogspot.com
